Measuring the Impact of Your Design System: Comprehensive Metrics & Cross‑Channel KPIs

Introduction

A modern design system isn’t just a collection of reusable UI components — it’s the connective tissue that ensures your brand’s voice, visuals and interactions are consistent everywhere users encounter you. This consistency is especially important in an omnichannel world. Users move fluidly between desktop websites, mobile apps, chatbots and physical stores, yet they still expect the same values, visual language and functional reliability across each touchpoint[1]. Cross‑channel consistency builds trust and differentiates a brand; mismatched interfaces and fragmented experiences quickly erode that trust[2]. Measuring how well your design system supports this consistency — and how it accelerates work for product teams — helps justify investment and guide improvements.

This report synthesizes guidance from leading practitioners and recent research to provide a robust framework for measuring the impact of your design system. It also introduces cross‑channel KPIs to ensure that your design system supports marketing campaigns across web, mobile, email and other channels. Many of the metrics below can be tracked quantitatively through analytics tools; others require qualitative feedback from the teams using your system.

Why Measurement Matters

- Validate business value. The goal of a design system isn’t adoption for its own sake. Adoption is a lagging indicator; success means enabling your organization to achieve its goals more efficiently and consistently[3]. Measuring ROI, time to market and product conversion rates makes the business case for continued investment.

- Support cross‑channel consistency. Users expect to start an action in one channel and finish it in another without friction[4]. A unified design system is the foundation for delivering a consistent experience across web, mobile, email, push notifications and offline touchpoints[5]. Cross‑channel performance metrics (e.g., CSAT, NPS, drop‑off rates) help verify that your design system delivers cohesive journeys across campaigns[6].

- Drive continuous improvement. Metrics reveal where the design system performs well and where it needs attention. For example, component insertions vs. detachments highlight if teams are adopting components as intended[7]. Qualitative data from surveys, office hours and support tickets explain why teams may avoid certain components[8].

Key Metrics for Design System Impact

1. Adoption & Usage

What it measures: The extent to which teams use the design system in production.

Why it matters: High adoption correlates with consistency, efficiency and scalability[9]. However, adoption alone is a lagging indicator; it tells you what happened but not why[3]. Combine adoption with leading indicators like early involvement of the design system team[10].

How to measure:

- Overall adoption rate: percentage of products or features built with the design system[11]. Track usage across departments and compare over time[12]. Automated analytics in design tools (e.g., tracking Figma/GitHub component usage) can provide accurate counts[13].

- Team‑level adoption: identify which teams use which components and where[14]. Qualitative metrics like satisfaction surveys, contributions and participation in “office hours” sessions reveal attitudes toward the system[14].

- Early involvement metric: measure how early feature teams invite the design system team to planning meetings or sprint kickoffs. Dan Mall argues this is a strong leading indicator: earlier involvement leads to more successful design systems[15].

- Component insertions vs. detachments: track how many times components are inserted into projects versus detached (modified)[16]. A high detachment rate signals that components don’t fit real‑world needs.
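The adoption and detachment figures above reduce to simple ratios. The following is a minimal sketch, assuming you can export product counts and per-component insertion/detachment counts from your analytics tooling. The `ComponentStats` record and the 20% flagging threshold are illustrative assumptions, not values from the cited sources.

```python
from dataclasses import dataclass


@dataclass
class ComponentStats:
    """Usage counts for one component (hypothetical export shape)."""
    name: str
    insertions: int
    detachments: int


def adoption_rate(products_using_system: int, total_products: int) -> float:
    """Percentage of products built with the design system."""
    if total_products == 0:
        return 0.0
    return 100.0 * products_using_system / total_products


def detachment_rate(stats: ComponentStats) -> float:
    """Detachments per 100 insertions for one component."""
    if stats.insertions == 0:
        return 0.0
    return 100.0 * stats.detachments / stats.insertions


def flag_components(stats: list[ComponentStats], threshold: float = 20.0) -> list[str]:
    """Names of components whose detachment rate exceeds the assumed threshold."""
    return [s.name for s in stats if detachment_rate(s) > threshold]


if __name__ == "__main__":
    sample = [ComponentStats("Button", 400, 20), ComponentStats("DatePicker", 50, 30)]
    print(adoption_rate(18, 24))      # share of products on the system
    print(flag_components(sample))    # components worth a follow-up conversation
```

A flagged component is a prompt for qualitative follow-up, not a verdict: the numbers show where teams detach, and conversations with those teams explain why.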

2. Component Reusability & Design Consistency

What it measures: How often pre‑built components are reused instead of custom solutions, and the degree to which teams adhere to the design system’s standards.

Why it matters: High reusability drives efficiency and reduces design/development time[17]. Consistency ensures a cohesive user experience[18] and reduces errors.

How to measure:

- Reusability ratio: ratio of reused components to custom-built components[19]. Track imports of design system packages or Figma library components across projects[20].

- UI adherence: number of screens or elements that fully follow the design system guidelines versus deviations[21]. Use design linting tools (e.g., the Design Lint plugin for Figma) to automate checks[22].

- Component usage across products: monitor reuse of components across products to see which teams benefit most[23].

- Products using different libraries: track the percentage of products using the coded libraries or Figma libraries — a key adoption KPI[24].
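One rough way to approximate the reusability ratio on the code side is to count component imports in front-end source files. The sketch below assumes a hypothetical package name (`@acme/design-system`), treats capitalized named imports as components, and treats relative imports as custom-built components. It reports reuse as a share of all component imports so the value stays defined when a project has no custom components. It handles only simple named-import statements and is no substitute for a real parser.

```python
import re

# Hypothetical package name; replace with your own design system package.
DS_PACKAGE = "@acme/design-system"

# Matches `import { A, B as C } from "pkg"` and captures the names and the package.
IMPORT_RE = re.compile(r'import\s*\{([^}]*)\}\s*from\s*["\']([^"\']+)["\']')


def count_component_imports(source: str) -> tuple[int, int]:
    """Return (design_system_imports, custom_imports) for one source file.

    Only capitalized names are counted, as a rough proxy for components.
    Relative imports ("./", "../") are assumed to be custom-built components.
    """
    ds = custom = 0
    for names, package in IMPORT_RE.findall(source):
        components = [n.split(" as ")[0].strip() for n in names.split(",") if n.strip()]
        count = sum(1 for c in components if c[:1].isupper())
        if package == DS_PACKAGE or package.startswith(DS_PACKAGE + "/"):
            ds += count
        elif package.startswith("."):
            custom += count
    return ds, custom


def reuse_share(sources: list[str]) -> float:
    """Design system imports as a fraction of all component imports."""
    ds_total = custom_total = 0
    for src in sources:
        ds, custom = count_component_imports(src)
        ds_total += ds
        custom_total += custom
    total = ds_total + custom_total
    return ds_total / total if total else 0.0
```

Running this per product repository also gives a simple view of which products lean on the system most.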

3. Efficiency & Time‑to‑Market

What it measures: How the design system accelerates design, development and release cycles.

Why it matters: The primary business value of a design system is to reduce time and effort to launch features. UXPin notes that teams should measure team efficiency, speed to market and effect on code before and after adopting a design system[25]. Reduced time to implement frees up resources for innovation and reduces costs[26].

How to measure:

- Baseline vs. post‑system timing: measure how long it takes to build a new product or component without the system versus with it[27]. Calculate average time to design a single component and average design hours per month, then compare[28].

- Reduction in code changes: track how many lines of code developers change per release; fewer changes for comparable features may indicate that more of the UI comes from reusable components[29].

- Average task completion time: compare time spent on design/development tasks before and after the design system[30].

- Time to market: measure the time from idea to release; many organizations use this KPI to evaluate design system impact[31].
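The before/after comparisons above come down to a percentage reduction against a baseline. A minimal sketch, assuming you have logged hours per component (or per task) for a period before and a period after adoption; the function and key names are illustrative:

```python
from statistics import mean


def percent_reduction(baseline: float, current: float) -> float:
    """Percentage by which `current` is lower than `baseline` (negative if it grew)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - current) / baseline


def compare_hours(before: list[float], after: list[float]) -> dict[str, float]:
    """Compare average hours per component before and after adopting the system."""
    avg_before = mean(before)
    avg_after = mean(after)
    return {
        "avg_before": avg_before,
        "avg_after": avg_after,
        "reduction_pct": percent_reduction(avg_before, avg_after),
    }
```

The same helper works for time to market if you pass idea-to-release durations instead of design hours. Comparing like with like (similar scope, similar team) matters more than the arithmetic.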

4. Contribution & Collaboration

What it measures: How actively designers and developers contribute to and engage with the design system.

Why it matters: A living design system thrives on contributions and collaboration. High contribution rates indicate that the system is evolving and addressing new use cases[32].

How to measure:

- Contribution rate: number of new components, improvements or bug fixes added to the system, and percentage of team members contributing[33]. Track pull/merge requests in your repository[34].

- Participation metrics: monitor attendance at design system office hours, workshops and retrospectives[14].

- Support requests and qualitative feedback: gather questions and suggestions via support channels and surveys[8].
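Contribution rate can be summarized from a list of merged pull/merge requests. The sketch below assumes each merged change has been exported as an author plus a label; the `MergedChange` shape and the label names are hypothetical, and how you obtain the records depends on your repository host.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class MergedChange:
    """One merged pull/merge request against the design system (hypothetical shape)."""
    author: str
    kind: str  # assumed labels: "new_component", "improvement", "bug_fix"


def contribution_summary(changes: list[MergedChange], team_size: int) -> dict:
    """Count contributions by kind and the share of the team that contributed."""
    contributors = {c.author for c in changes}
    pct = 100.0 * len(contributors) / team_size if team_size else 0.0
    return {
        "total": len(changes),
        "by_kind": dict(Counter(c.kind for c in changes)),
        "contributors": len(contributors),
        "contributor_pct": pct,
    }
```

Tracking `contributor_pct` alongside the raw count helps distinguish a system sustained by a few maintainers from one with broad participation.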

5. Performance, Accessibility & Scalability

What it measures: How the design system affects application performance, meets accessibility standards and scales across platforms.

Why it matters: A design system that degrades performance or fails accessibility guidelines undermines user experience.

How to measure:

- Performance impact: evaluate page load times and bundle size when using design system components[35]. Use tools like Google Lighthouse to compare performance with and without the system[36].

- Accessibility compliance: percentage of components meeting WCAG standards and number of accessibility issues resolved[37]. Use automated accessibility scanners and manual audits[38].

- Scalability: track how easily components adapt to new platforms (web, mobile, desktop) and how quickly new products launch using the design system[39].

- User satisfaction: measure internal user feedback and external user satisfaction with interfaces built on the design system[40].
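As a sketch of how the compliance and performance figures might be computed, the helpers below assume you already have, per component, a count of open accessibility violations (from scanners plus manual audits) and, per page, measurements taken with and without the design system. Treating "zero open violations" as compliant is a simplifying assumption; automated scans alone cannot establish WCAG conformance.

```python
def compliance_pct(violations_by_component: dict[str, int]) -> float:
    """Percentage of components with zero open accessibility violations."""
    if not violations_by_component:
        return 0.0
    passing = sum(1 for v in violations_by_component.values() if v == 0)
    return 100.0 * passing / len(violations_by_component)


def performance_delta(
    without_system: dict[str, float], with_system: dict[str, float]
) -> dict[str, float]:
    """Percent change per shared metric; positive means the value increased."""
    return {
        metric: 100.0 * (with_system[metric] - base) / base
        for metric, base in without_system.items()
        if metric in with_system and base
    }
```

For metrics such as bundle size and load time, a negative delta is the desirable direction.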

6. Business & Cross‑Channel Impact

What it measures: The downstream effects of the design system on business outcomes and cross‑channel marketing campaigns.

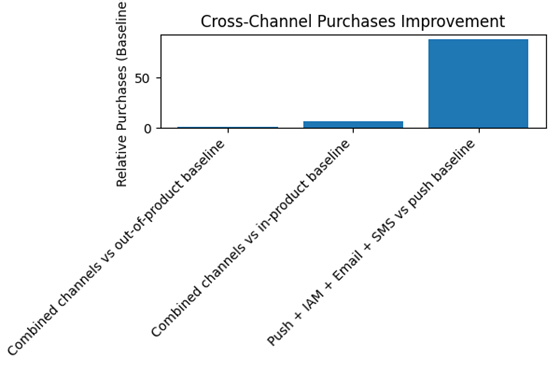

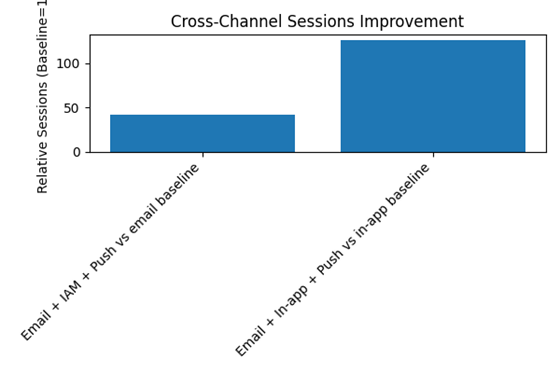

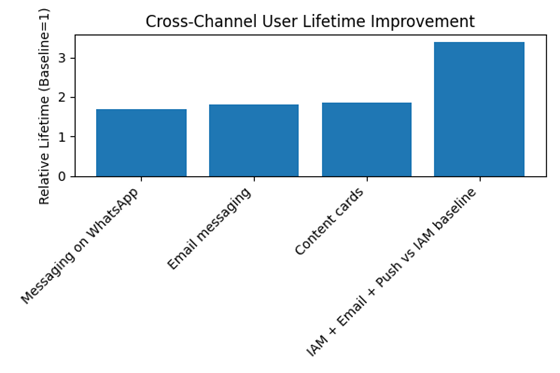

Why it matters: Ultimately, a design system should help achieve business goals: more conversions, higher retention and stronger brand recognition. Cross‑channel marketing research by Braze shows that engaging users across multiple channels significantly improves key metrics. Messaging users on both in‑product and out‑of‑product channels results in 25% more purchases per user compared with out‑of‑product channels alone and 6.5× more purchases per user compared with in‑product channels alone[41]. When brands combine email, in‑app messages, mobile push and web push, they see 126× higher average sessions per user than with in‑app messages only[42]. Contacting users via WhatsApp increases user lifetime by 70%, via email by 81% and via content cards by 86%[43]. These improvements illustrate the power of cross‑channel engagement.

How to measure:

- Conversion rate: track conversions from campaigns built with your design system. Compare cross‑channel campaigns (email + push + in‑app) against single‑channel campaigns[44].

- Open and click‑through rates: for email and push notifications; these metrics indicate how well your design adapts to channel constraints[44].

- Customer satisfaction (CSAT) & Net Promoter Score (NPS): monitor how users perceive your brand across channels and whether they’d recommend it[6].

- Drop‑off rates and funnel progression: analyze where users abandon tasks across channels to identify friction.

- Cross‑channel session and purchase lift: measure relative improvements in sessions and purchases when multiple channels are combined. The charts below visualize some of these improvements.
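The survey and lift metrics in this list follow widely used conventions, sketched below. NPS is computed as the percentage of promoters (scores 9 to 10) minus the percentage of detractors (scores 0 to 6) on a 0 to 10 scale. The CSAT helper assumes a 1 to 5 scale where 4 and 5 count as satisfied, which is a common but not universal convention. `relative_lift` expresses a multi-channel result as a multiple of a single-channel baseline.

```python
def nps(scores: list[int]) -> float:
    """Net Promoter Score from 0-10 responses: % promoters minus % detractors."""
    if not scores:
        raise ValueError("scores must not be empty")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


def csat(ratings: list[int], satisfied_from: int = 4) -> float:
    """Percentage of ratings at or above the (assumed) satisfaction cutoff."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    satisfied = sum(1 for r in ratings if r >= satisfied_from)
    return 100.0 * satisfied / len(ratings)


def relative_lift(baseline: float, variant: float) -> float:
    """Variant as a multiple of the baseline; 1.25 means 25% more."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return variant / baseline
```

For example, if a single-channel campaign yields 40 purchases per 1,000 users and the cross‑channel version yields 50, `relative_lift(40, 50)` gives 1.25, that is, 25% more purchases per user.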

Visualizing Cross‑Channel Impact

The following charts translate cross‑channel research findings into visual summaries. Each chart shows the relative improvement compared with a baseline (single channel). For example, “Combined channels vs out‑of‑product baseline” means that messaging users on both in‑product and out‑of‑product channels yields 1.25× as many purchases per user (25% more) as using out‑of‑product channels alone[41].

Purchases per User

Sessions per User

User Lifetime

7. Maintaining & Evolving the System

Design systems are living products. Ongoing monitoring and maintenance ensure components continue to serve teams and users[45]. To sustain momentum:

- Monitor insertions and detachments: Look for plateaus or drops in component insertions and investigate detachments to understand unmet needs[16].

- Collect qualitative feedback: Office hours, quarterly surveys and direct conversations with product teams provide context behind the numbers[8].

- Use metrics to tell a story: Metrics should inform decisions, not just fill dashboards. As Intuit’s Madison Mathieu notes, metrics “validate our efforts, guide our improvements, and align our creative endeavors with the larger goals of the organization”[46].

- Align with business goals: Tie design system work to organizational objectives. Dan Mall advises connecting design system metrics to product value and roadmaps[47]. This ensures that leadership sees the design system as integral to achieving strategic outcomes.

Strategies for Improving Design System Adoption & Cross‑Channel Performance

- Be a teacher and advocate. Promote the design system through documentation, workshops and one‑on‑one support. When teams understand how the system saves them time, they’re more likely to use it[48].

- Kill complexity. Simplify components and guidelines. Over‑engineering makes the system hard to use; aim for intuitive components that solve real problems[49].

- Go beyond Figma. A design system is more than a Figma library; ensure that coded components, documentation and governance are in place[50].

- Tell a story over time. Report metrics at regular intervals (quarterly or twice a year) to show progress and highlight areas for improvement[51]. Run baseline measurements before major updates and track improvement after[27].

- Align across teams around the customer journey. Break down silos by mapping how customers move across channels and involving marketing, product and UX teams[52]. A unified design system ensures consistent experiences across these touchpoints.

- Adopt a unified data flow. Ensure that user data flows seamlessly between channels so experiences remain cohesive[53]. Use cross‑channel analytics and predictive analytics to trigger messages based on real‑time behavior[54].

- Test across channels and iterate. Conduct cross‑channel usability tests to identify friction points[55]. Use A/B testing to evaluate component variations and campaign messaging[56].

Conclusion

Measuring the impact of a design system requires a blend of quantitative and qualitative metrics — adoption rates, reusability ratios, speed to market, contribution rates, performance scores and business KPIs. It also requires a cross‑channel perspective: in today’s omnichannel environment, your design system must support cohesive experiences across email, web, mobile apps, push notifications and physical touchpoints. When teams track the right metrics and act on the insights they reveal, a design system becomes more than a set of components — it becomes a strategic asset that drives efficiency, consistency and customer satisfaction across every channel.

[1] [2] [4] [5] [6] [52] [53] [55] Cross-Channel Consistency: Maintaining UX in an Omnichannel World | by Robert “Joey” Werkmeister | Bootcamp | Medium

[3] [10] [15] “In Search of a Better Design System Metric than Adoption,” an article by Dan Mall

https://danmall.com/posts/in-search-of-a-better-design-system-metric-than-adoption/

[7] [8] [16] [45] Metrics – Redesigning Design Systems

https://redesigningdesign.systems/component-process/metrics

[9] [11] [13] [17] [18] [19] [20] [21] [22] [26] [32] [33] [34] [35] [36] [37] [38] [39] [40] Measuring Design System. The effectiveness of a design system… | by uxplanet.org | UX Planet

https://uxplanet.org/measuring-design-system-09cfe75f68ec

[12] [23] [24] [30] [31] The Design System Metrics Collection

https://thedesignsystem.guide/design-system-metrics

[14] [46] [47] [48] [49] [50] [51] How design system leaders define and measure adoption · Omlet

https://omlet.dev/blog/how-leaders-measure-design-system-adoption/

[25] [27] [28] [29] How to Evaluate Your Design System’s Impact with Metrics?

https://www.uxpin.com/studio/blog/design-system-metrics/

[41] [42] [43] [54] What Is Cross-Channel Marketing? Benefits & Examples | Braze

https://www.braze.com/resources/articles/cross-channel-engagement-matters

[44] [56] Cross-Channel Campaign Management 101 and Templates | Smartsheet

https://www.smartsheet.com/content/cross-channel-campaign-management